Connectors (Beta)

Overview

Welcome to the GroveStreams Connector Help Page! Here, you'll find detailed information on GroveStreams Connectors. Many devices can be programmed to use the GroveStreams HTTP and MQTT APIs, but sometimes programming isn't an option. That's where Connectors come in.A GroveStreams Connector:

- Accepts Data: Accepts incoming data from devices, sensors, gateways, or other cloud platforms in their proprietary format.

- Transforms Data: Converts the collected data into a required GroveStreams data format.

- Inserts Data: Inserts the data into GroveStreams component streams.

Many devices and other platforms have the ability to "push" data to another platform. Some sites refer to this as a webhook. GroveStreams has hard-coded the ability to accept data from several company's webhooks. See our How-To directions here:

Instead of us writing code and maintaining each company's webhooks, we've given you the ability to design your own connector to handle incoming calls.

The HTTP GS SQL Connector

We anticipate many connectors and processors in the future, but today, there is only one type of connector, the HTTP GS SQL connector. The HTTP GS SQL Connector:

- Accepts incoming HTTP(S) calls. Each connector can process many incomming calls from many sources.

- Transforms Data using GS SQL

- Inserts Data into streams using the GS SQL INSERT OR REPLACE INTO statement

Common GS SQL Parsing Functions used by Connectors:

The GS SQL Connector is available for all pricing plans. Customers with plans that do not include GS SQL will not be able to make SELECT calls to existing stream data. Only parsing functions and INSERT will be available.

Getting Started

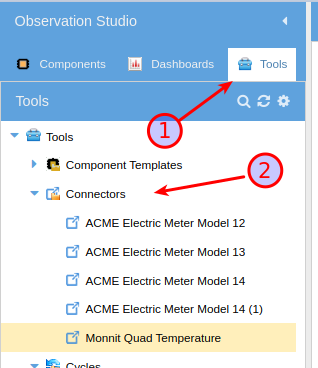

1. Sign-in to GroveStreams, enter the studio, choose the Tools tab on the left side of the studio.

2. Right-click Connectors and select New - Connector

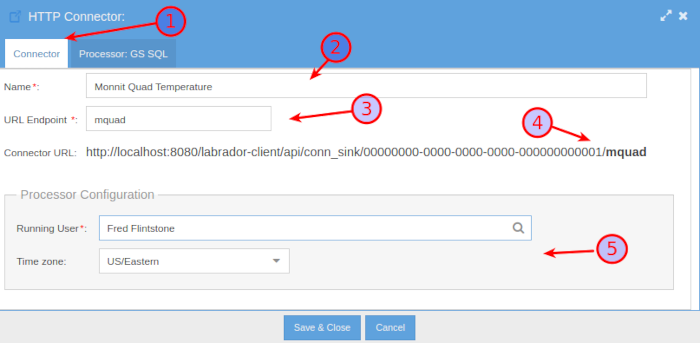

1. Select the Connector tab.

2. Enter a name for the connector

3. Enter a URL Endpoint. The Endpoint must be unique within the organization. The URL Endpoint will be appended to the connector sink URL that appears below it.

4. The connector sink URL is the URL that is used by external systems to push data to the connector. Enter this URL in the external system's webhook, or similar "push data" tool. Go to the studio toolbar Admin-Security-HTTP API Keys and select an existing key such as Connector URL Sink Key or create a new one. Click View Secret Key... and append that key onto the sink URL before using the URL from the source.

5. Select a user to run the connector as. The user's configured security is respected during the running of the connector. Enter a time zone to be used by the SQL.

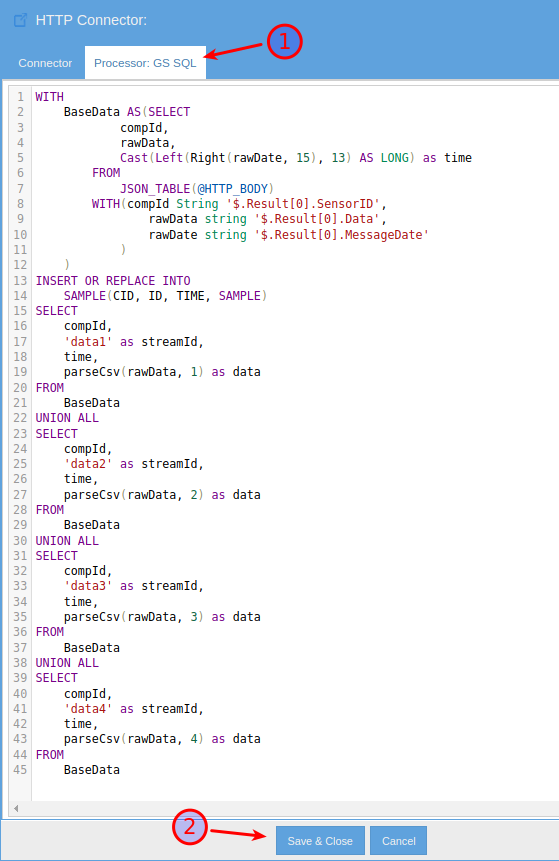

1. Select the Processor: GS SQL tab and enter the GS SQL that will transform and insert the incoming data into component streams. There are three system variables available to the parsing SQL:

- @HTTP_BODY: This is the HTTP Entity, or body, that was sent with the webhook. This could be blank, or NULL, if the caller's HTTP method is GET or they didn't include a body.

- @HTTP_QUERY_STRING: This is the query string part of the calling URL. The part after the question mark. It is URL decoded before it is set.

- @HTTP_METHOD: The caller's HTTP method: PUT, POST, GET, or DELETE

The above example processes an iMonnit webhook for the Quad Temperature sensor. The following JSON is sent by the webhook and is accessed via the @HTTP_BODY SQL variable:

{ "Method": "SensorDataMessages", "Result": [ { "DataMessageGUID": "7a19c032-1a36-462b-9e48-xxxxxxxxxxxx", "SensorID": 751000, "MessageDate": "/Date(1649721230000)/", "State": 0, "SignalStrength": 75, "Voltage": 3.46, "Battery": 100, "Data": "28.5, 23.8, 17.5, 13", "DisplayData": " Condenser Coil 83.3° F, Return Air 74.8° F, Evaporator Coil 63.5° F, Conditioned Air 55.4° F", "PlotValue": "83.3", "MetNotificationRequirements": false, "GatewayID": 974384, "DataValues": "28.5|23.8|17.5|13", "DataTypes": "TemperatureData|TemperatureData|TemperatureData|TemperatureData", "PlotValues": "83.3|74.84|63.5|55.4", "PlotLabels": "Fahrenheit|Fahrenheit|Fahrenheit|Fahrenheit" } ] }

The GS SQL Statement

Most Connector SQL statements will not be this complicated. We wanted to include an example of parsing a more complicated JSON object. DataValues arriving as a delimited string is what makes it complicated. The data does not have to be JSON. It can be any string including CSV strings.

WITH

BaseData AS(SELECT

compId,

rawData,

Cast(Left(Right(rawDate, 15), 13) AS LONG) as time

FROM

JSON_TABLE(@HTTP_BODY)

WITH(compId String '$.Result[0].SensorID',

rawData string '$.Result[0].Data',

rawDate string '$.Result[0].MessageDate'

)

)

INSERT OR REPLACE INTO

SAMPLE(CID, ID, TIME, SAMPLE)

SELECT

compId,

'data1' as streamId,

time,

parseCsv(rawData, 1) as data

FROM

BaseData

UNION ALL

SELECT

compId,

'data2' as streamId,

time,

parseCsv(rawData, 2) as data

FROM

BaseData

UNION ALL

SELECT

compId,

'data3' as streamId,

time,

parseCsv(rawData, 3) as data

FROM

BaseData

UNION ALL

SELECT

compId,

'data4' as streamId,

time,

parseCsv(rawData, 4) as data

FROM

BaseData

Example Overview

1. The Monnit sensor sends a reading to the Monnit cloud. A Monnit webhook is configured to "push" the sensor's JSON data to the configured GroveStreams Connector's sink URL2. The connector uses GS SQL to process the incoming JSON:

- The WITH statement declares a SELECT statement that is reused four times below and makes reading the SQL easier.

- The JSON_TABLE function parses the JSON from the @HTTP_BODY variable.

- The JSON_TABLE WITH statement extracts the component ID, sensor data (4 values delimited) and the time.

- The SQL function, parseCsv parses the delimited data string into 4 parts.

- The four temperature values are combined into one INSERT statement by using four SELECT statements with UNION ALL statements.

- The INSERT OR REPLACE INTO statements will insert the data into component streams matching the component ID and stream IDs. The WITH statement can also include a component template ID and a folder path for new components.

Simple JSON SQL Example

An example of parsing a simple JSON structure. This can be run from the Studio's GS Query Editor and Tester via Toolbar-Admin. It is setting the @HTTP_BODY variable which is a great way to test and troubleshoot a connector's SQL.

SET @HTTP_BODY = '{

"compId": "comp1",

"streamId": "stream1",

"data": 120.2,

"time": 1738908000000

}';

INSERT OR REPLACE INTO

SAMPLE(CID, ID, TIME, SAMPLE)

SELECT CID, ID, TIME, DATA

FROM

JSON_TABLE(@HTTP_BODY)

WITH(CID String '$.compId',

ID String '$.streamId',

DATA String '$.data',

TIME Long '$.time'

);

Simple CSV SQL Example

An example of parsing a simple CSV structure. This can be run from the Studio's GS Query Editor and Tester via Toolbar-Admin. It is setting the @HTTP_BODY variable which is a great way to test and troubleshoot a connector's SQL.

SET @HTTP_BODY = '"comp1","stream1","2021-04-01T05:00:00.000",24

"comp1","stream1","2021-04-01T06:00:00.000",23

"comp1","stream1","2021-04-01T07:00:00.000",22';

INSERT OR REPLACE INTO

SAMPLE(CID, ID, TIME, SAMPLE)

SELECT CID, ID, TOEPOCHMILLIS(STRING_TIME, 'yyyy-MM-dd''T''HH:mm:ss.SSS') as TIME, DATA

FROM

CSV_TABLE(@HTTP_BODY)

WITH (

1 CID STRING,

2 ID STRING,

3 STRING_TIME STRING,

4 DATA LONG)

Simple Query String parsing SQL Example

An example of extracting the component and stream ID from the URL query string. This can be run from the Studio's GS Query Editor and Tester via Toolbar-Admin. It is setting the @HTTP_QUERY_STRING variable which is a great way to test and troubleshoot a connector's SQL.The component and stream ID are part of the URL query string in this example. The function PARSECSV is used to split the query string and return the substring at the requested one-based index. The substrings are placed into a variables @STATION and @READINGS. The variables are used within the INSERT SELECT statement.,

SET @HTTP_QUERY_STRING = 'station=12845&readings=temp';

SET @HTTP_BODY = '"2021-04-01T05:00:00.000",24

"2021-04-01T06:00:00.000",23

"2021-04-01T07:00:00.000",22';

SET @STATION = PARSECSV(PARSECSV(@HTTP_QUERY_STRING, 1, '&'), 2, '=');

SET @READINGS = PARSECSV(PARSECSV(@HTTP_QUERY_STRING, 2, '&'), 2, '=');

INSERT OR REPLACE INTO

SAMPLE(CID, ID, TIME, SAMPLE)

SELECT @STATION, @READINGS, TOEPOCHMILLIS(STRING_TIME, 'yyyy-MM-dd''T''HH:mm:ss.SSS') as TIME, DATA

FROM

CSV_TABLE(@HTTP_BODY)

WITH (

1 STRING_TIME STRING,

2 DATA LONG)